| Public Member Functions | |
|---|---|
| | InferenceEngine (const std::string &model_path, float update_ratio=0.1f, float confidence_threshold=0.6f, size_t history_size=5, size_t min_consensus_votes=3) |
| | Constructor for the InferenceEngine. |
| void | RecordData (int duration, const char *prefix) |
| void | InferenceTask () |
| void | OnData (const Type::Vector3 &accel, const Type::Vector3 &gyro, const Type::Eulr &eulr) |
| void | RegisterDataCallback (const std::function< void(ModelOutput)> &callback) |
| void | RunUnitTest () |

| Private Member Functions | |
|---|---|
| void | CollectSensorData () |
| ModelOutput | RunInference (std::vector< float > &input_data) |
| | Runs inference on the collected sensor data. |
| template< typename T > std::string | VectorToString (const std::vector< T > &vec) |

Private Attributes | |

| Ort::Env | env_ |

| Ort::SessionOptions | session_options_ |

| Ort::Session | session_ |

| Ort::AllocatorWithDefaultOptions | allocator_ |

| std::vector< std::string > | input_names_ |

| std::vector< const char * > | input_names_cstr_ |

| std::vector< int64_t > | input_shape_ |

| size_t | input_tensor_size_ |

| std::vector< std::string > | output_names_ |

| std::vector< const char * > | output_names_cstr_ |

| std::vector< int64_t > | output_shape_ |

| std::deque< float > | sensor_buffer_ |

| std::deque< ModelOutput > | prediction_history_ |

| float | confidence_threshold_ |

| size_t | history_size_ |

| size_t | min_consensus_votes_ |

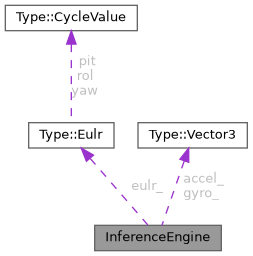

| Type::Eulr | eulr_ {} |

| Type::Vector3 | gyro_ {} |

| Type::Vector3 | accel_ {} |

| std::function< void(ModelOutput)> | data_callback_ |

| std::binary_semaphore | ready_ |

| std::thread | inference_thread_ |

| int | new_data_number_ |

Detailed Description

Definition at line 53 of file comp_inference.hpp.

Constructor & Destructor Documentation

◆ InferenceEngine()

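InferenceEngine::InferenceEngine (const std::string &model_path, float update_ratio = 0.1f, float confidence_threshold = 0.6f, size_t history_size = 5, size_t min_consensus_votes = 3)

[inline], [explicit]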

Constructor for the InferenceEngine.

Parameters

- model_path: Path to the ONNX model file.
- update_ratio: Ratio for updating the sensor buffer (default 0.1f).
- confidence_threshold: Minimum probability required to accept a prediction (default 0.6f).
- history_size: Number of past predictions stored for voting (default 5).
- min_consensus_votes: Minimum votes required to confirm a prediction (default 3).

Definition at line 65 of file comp_inference.hpp.
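The confidence_threshold, history_size and min_consensus_votes parameters describe a confidence-gated voting filter over recent predictions. The following is a minimal C++17 sketch of that idea, not the class's actual implementation; the `Motion` enum, the `ConsensusFilter` class and its `Update` method are hypothetical stand-ins (this page does not define ModelOutput). Low-confidence predictions are discarded, the rest go into a bounded history, and a label is reported only once it holds at least min_consensus_votes entries.

```cpp
#include <cstddef>
#include <deque>
#include <map>
#include <optional>

// Hypothetical stand-in for ModelOutput, which this page does not define.
enum class Motion { kIdle, kWalk, kRun };

// Confidence-gated majority vote over the most recent predictions.
class ConsensusFilter {
 public:
  ConsensusFilter(float confidence_threshold, std::size_t history_size,
                  std::size_t min_consensus_votes)
      : confidence_threshold_(confidence_threshold),
        history_size_(history_size),
        min_consensus_votes_(min_consensus_votes) {}

  // Feeds one raw prediction; returns a label only when at least
  // min_consensus_votes_ entries in the history agree on it.
  std::optional<Motion> Update(Motion label, float probability) {
    if (probability < confidence_threshold_) {
      return std::nullopt;  // low-confidence predictions do not vote
    }
    history_.push_back(label);
    if (history_.size() > history_size_) {
      history_.pop_front();  // keep only the newest history_size_ entries
    }
    std::map<Motion, std::size_t> votes;
    for (Motion m : history_) {
      // If two labels could both qualify, the first to reach the count wins.
      if (++votes[m] >= min_consensus_votes_) {
        return m;
      }
    }
    return std::nullopt;
  }

 private:
  float confidence_threshold_;
  std::size_t history_size_;
  std::size_t min_consensus_votes_;
  std::deque<Motion> history_;
};
```

With the constructor's defaults (0.6f, 5, 3), at most one label can collect three votes in a five-entry history, so the result is unambiguous; a history that is large relative to the vote count would need an explicit tie-breaking rule.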

Member Function Documentation

◆ CollectSensorData()

void InferenceEngine::CollectSensorData ()

[inline], [private]

Definition at line 244 of file comp_inference.hpp.

◆ InferenceTask()

void InferenceEngine::InferenceTask ()

[inline]

Definition at line 166 of file comp_inference.hpp.

◆ OnData()

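void InferenceEngine::OnData (const Type::Vector3 &accel, const Type::Vector3 &gyro, const Type::Eulr &eulr)

[inline]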

Definition at line 195 of file comp_inference.hpp.

◆ RecordData()

void InferenceEngine::RecordData (int duration, const char *prefix)

[inline]

Definition at line 129 of file comp_inference.hpp.

◆ RegisterDataCallback()

void InferenceEngine::RegisterDataCallback (const std::function< void(ModelOutput)> &callback)

[inline]

Definition at line 203 of file comp_inference.hpp.
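RegisterDataCallback takes a std::function< void(ModelOutput)>, which the class keeps in data_callback_. The sketch below shows that registration pattern in isolation; the `Motion` enum and the `CallbackHolder` class with its `Publish` method are hypothetical, and when the real class invokes its callback is not documented on this page. The detail worth copying is the emptiness check, since invoking an empty std::function throws std::bad_function_call.

```cpp
#include <functional>

// Hypothetical stand-in for ModelOutput.
enum class Motion { kIdle, kWalk, kRun };

class CallbackHolder {
 public:
  // Same shape as the documented RegisterDataCallback: store a copy of the callable.
  void RegisterDataCallback(const std::function<void(Motion)>& callback) {
    data_callback_ = callback;
  }

  // Called wherever a confirmed prediction becomes available.
  void Publish(Motion result) const {
    if (data_callback_) {  // skip silently if nothing has been registered yet
      data_callback_(result);
    }
  }

 private:
  std::function<void(Motion)> data_callback_;
};
```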

◆ RunInference()

ModelOutput InferenceEngine::RunInference (std::vector< float > &input_data)

[inline], [private]

Runs inference on the collected sensor data.

Parameters

- input_data: Vector containing preprocessed sensor data.

Returns

- The predicted motion category as a ModelOutput value.

Definition at line 266 of file comp_inference.hpp.
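How RunInference turns the raw model output into a ModelOutput is not documented here. A common approach, assumed in this sketch, is that the model emits one raw score (logit) per class; the caller takes the arg-max and uses its softmax probability as the value compared against confidence_threshold. The `Prediction` struct and `ArgmaxWithSoftmax` function are hypothetical.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <iterator>
#include <vector>

struct Prediction {
  std::size_t class_index;  // index of the most likely class
  float probability;        // softmax probability of that class
};

// Assumes `scores` is non-empty and holds one raw logit per motion class.
Prediction ArgmaxWithSoftmax(const std::vector<float>& scores) {
  const auto best = std::max_element(scores.begin(), scores.end());
  const float max_score = *best;
  float sum = 0.0f;
  for (float s : scores) {
    sum += std::exp(s - max_score);  // shift by the max logit for numerical stability
  }
  const auto index = static_cast<std::size_t>(std::distance(scores.begin(), best));
  // After the shift the best class contributes exp(0) = 1 to the numerator.
  return {index, 1.0f / sum};
}
```

If the exported model already ends in a softmax layer, the exponentiation is redundant and the largest output can be used directly as the probability.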

◆ RunUnitTest()

void InferenceEngine::RunUnitTest ()

[inline]

Definition at line 207 of file comp_inference.hpp.

◆ VectorToString()

template< typename T > std::string InferenceEngine::VectorToString (const std::vector< T > &vec)

[inline], [private]

Definition at line 320 of file comp_inference.hpp.
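VectorToString is a private helper template, presumably for logging vectors such as input_shape_. Its output format is not documented; a minimal sketch that writes any streamable element type as "[a, b, c]" could look like this (the bracketed, comma-separated format is an assumption).

```cpp
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Formats a vector as "[a, b, c]" using operator<< for each element.
template <typename T>
std::string VectorToString(const std::vector<T>& vec) {
  std::ostringstream oss;
  oss << '[';
  for (std::size_t i = 0; i < vec.size(); ++i) {
    if (i != 0) {
      oss << ", ";  // separator between elements, none before the first
    }
    oss << vec[i];
  }
  oss << ']';
  return oss.str();
}
```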

Field Documentation

◆ accel_

Type::Vector3 InferenceEngine::accel_ {}

[private]

Definition at line 358 of file comp_inference.hpp.

◆ allocator_

Ort::AllocatorWithDefaultOptions InferenceEngine::allocator_

[private]

Definition at line 332 of file comp_inference.hpp.

◆ confidence_threshold_

float InferenceEngine::confidence_threshold_

[private]

Definition at line 349 of file comp_inference.hpp.

◆ data_callback_

std::function< void(ModelOutput)> InferenceEngine::data_callback_

[private]

Definition at line 361 of file comp_inference.hpp.

◆ env_

Ort::Env InferenceEngine::env_

[private]

Definition at line 329 of file comp_inference.hpp.

◆ eulr_

Type::Eulr InferenceEngine::eulr_ {}

[private]

Definition at line 356 of file comp_inference.hpp.

◆ gyro_

Type::Vector3 InferenceEngine::gyro_ {}

[private]

Definition at line 357 of file comp_inference.hpp.

◆ history_size_

size_t InferenceEngine::history_size_

[private]

Definition at line 351 of file comp_inference.hpp.

◆ inference_thread_

std::thread InferenceEngine::inference_thread_

[private]

Definition at line 365 of file comp_inference.hpp.

◆ input_names_

std::vector< std::string > InferenceEngine::input_names_

[private]

Definition at line 335 of file comp_inference.hpp.

◆ input_names_cstr_

std::vector< const char * > InferenceEngine::input_names_cstr_

[private]

Definition at line 336 of file comp_inference.hpp.
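input_names_ and input_names_cstr_ follow a common ONNX Runtime pattern: std::string values own the node names, and a parallel std::vector< const char * > supplies the const char* const* arrays that Ort::Session::Run takes for input and output names (output_names_ and output_names_cstr_ presumably mirror this). The sketch below shows the pattern without ONNX Runtime itself; the `NameTable` struct and its methods are hypothetical. The pointer vector should be built only after the string vector stops growing, because a reallocation can invalidate pointers obtained earlier from c_str().

```cpp
#include <string>
#include <utility>
#include <vector>

struct NameTable {
  std::vector<std::string> names;       // owns the characters
  std::vector<const char*> names_cstr;  // non-owning views into `names`

  void Add(std::string name) { names.push_back(std::move(name)); }

  // Call once `names` is complete; any later push_back into `names`
  // may reallocate and leave these pointers dangling.
  void Finalize() {
    names_cstr.clear();
    names_cstr.reserve(names.size());
    for (const std::string& n : names) {
      names_cstr.push_back(n.c_str());
    }
  }
};
```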

◆ input_shape_

std::vector< int64_t > InferenceEngine::input_shape_

[private]

Definition at line 337 of file comp_inference.hpp.

◆ input_tensor_size_

size_t InferenceEngine::input_tensor_size_

[private]

Definition at line 338 of file comp_inference.hpp.
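input_tensor_size_ is presumably the element count implied by input_shape_, which Ort::Value::CreateTensor needs alongside the shape. A sketch of that computation with a hypothetical `TensorElementCount` helper follows. ONNX Runtime reports symbolic (dynamic) dimensions as -1, so they must be replaced by a concrete size before multiplying; this sketch simply rejects them.

```cpp
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Number of elements in a tensor of the given shape (product of its dimensions).
// An empty shape denotes a scalar and yields 1.
std::size_t TensorElementCount(const std::vector<int64_t>& shape) {
  int64_t count = 1;
  for (int64_t dim : shape) {
    if (dim < 0) {
      // -1 marks a dynamic dimension that has not been fixed to a real size.
      throw std::invalid_argument("dynamic dimension in shape");
    }
    count *= dim;
  }
  return static_cast<std::size_t>(count);
}
```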

◆ min_consensus_votes_

size_t InferenceEngine::min_consensus_votes_

[private]

Definition at line 353 of file comp_inference.hpp.

◆ new_data_number_

int InferenceEngine::new_data_number_

[private]

Definition at line 366 of file comp_inference.hpp.

◆ output_names_

std::vector< std::string > InferenceEngine::output_names_

[private]

Definition at line 340 of file comp_inference.hpp.

◆ output_names_cstr_

std::vector< const char * > InferenceEngine::output_names_cstr_

[private]

Definition at line 341 of file comp_inference.hpp.

◆ output_shape_

std::vector< int64_t > InferenceEngine::output_shape_

[private]

Definition at line 342 of file comp_inference.hpp.

◆ prediction_history_

std::deque< ModelOutput > InferenceEngine::prediction_history_

[private]

Definition at line 346 of file comp_inference.hpp.

◆ ready_

std::binary_semaphore InferenceEngine::ready_

[private]

Definition at line 364 of file comp_inference.hpp.

◆ sensor_buffer_

std::deque< float > InferenceEngine::sensor_buffer_

[private]

Definition at line 345 of file comp_inference.hpp.
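sensor_buffer_ is a std::deque< float >, and the constructor's update_ratio is described only as the "ratio for updating the sensor buffer". One plausible reading, assumed here and not confirmed by this page, is a sliding window that becomes ready for inference each time a fraction update_ratio of it has been replaced by new values. The `SlidingWindow` class below is a hypothetical sketch of that reading.

```cpp
#include <algorithm>
#include <cstddef>
#include <deque>
#include <vector>

// Keeps the latest `window_size` values and reports a full window once every
// `stride` new values, where stride = window_size * update_ratio (at least 1).
class SlidingWindow {
 public:
  SlidingWindow(std::size_t window_size, float update_ratio)
      : window_size_(window_size),
        stride_(std::max<std::size_t>(
            1, static_cast<std::size_t>(window_size * update_ratio))) {}

  // Appends one value; returns true when a window is ready for inference.
  bool Push(float value) {
    buffer_.push_back(value);
    if (buffer_.size() > window_size_) {
      buffer_.pop_front();  // drop the oldest value
    }
    ++new_since_last_;
    if (buffer_.size() == window_size_ && new_since_last_ >= stride_) {
      new_since_last_ = 0;
      return true;
    }
    return false;
  }

  // Copies the current window, oldest value first.
  std::vector<float> Snapshot() const {
    return std::vector<float>(buffer_.begin(), buffer_.end());
  }

 private:
  std::size_t window_size_;
  std::size_t stride_;
  std::size_t new_since_last_ = 0;
  std::deque<float> buffer_;
};
```

Under this reading, update_ratio = 0.1f emits a window after every 10% of new data, so consecutive windows overlap by 90%.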

◆ session_

Ort::Session InferenceEngine::session_

[private]

Definition at line 331 of file comp_inference.hpp.

◆ session_options_

Ort::SessionOptions InferenceEngine::session_options_

[private]

Definition at line 330 of file comp_inference.hpp.

The documentation for this class was generated from the following file:

- src/component/comp_inference.hpp